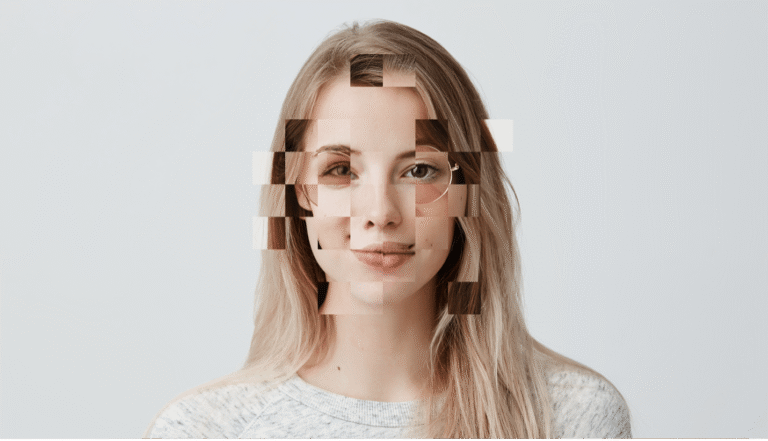

The rise of deepfake technology has transformed the digital landscape, creating new challenges for public trust, media consumption, and digital literacy. From celebrity face swaps to political misinformation, synthetic media has evolved into a powerful tool capable of influencing perception on a massive scale. This article examines the rapid mainstream adoption of deepfake tools, high-profile case studies, and the role of social platforms in the spread of manipulated content. It also explores detection methods, ethical concerns, and how users can navigate a reality where seeing is no longer believing.

Deepfake Tools Going Mainstream

Deepfake technology is no longer the exclusive domain of AI researchers or specialized developers. With platforms offering drag-and-drop interfaces, users without coding skills can now create convincing manipulated videos in minutes. Tools like DeepFaceLab, FaceSwap, and Zao have shown that production quality no longer depends on expensive equipment or deep technical expertise. This democratization of deepfake creation has accelerated adoption, making synthetic media both a creative outlet and a potential vector for misinformation.

Viral Examples and Public Reaction

High-profile deepfakes involving Hollywood actors, sports stars, and political figures have gone viral, capturing millions of views in days. For instance, deepfaked speeches attributed to world leaders have spread widely before fact-checkers could intervene, while manipulated sports interviews have misled fans and altered public narratives. In influencer culture, creators have faced unauthorized synthetic replications that damage reputations and dilute brand partnerships, further complicating digital identity management.

Political Case Studies and Election Integrity

Political campaigns worldwide have seen the impact of deepfake videos timed to influence voter perception. During election seasons, these videos can be deployed to mimic a candidate’s voice and mannerisms, creating fabricated scandals or false endorsements. The problem is compounded by the speed at which such content spreads on social networks, often reaching millions before removal or correction.

Deepfakes in Sports and Athletic Branding

Athletes are increasingly targeted by deepfake technology, with fabricated post-game interviews or endorsements that never happened. The financial stakes are high: a misleading video can jeopardize multi-million-dollar sponsorship contracts, harm an athlete's public image, and trigger contractual disputes. Sports organizations now face the dual challenge of protecting both live event footage and the digital likeness of their players.

The Role of Social Media in Spreading Synthetic Content

Social media algorithms are designed to prioritize engaging content, and deepfakes often deliver high engagement through shock value and novelty. This algorithmic bias accelerates the spread of synthetic media, ensuring that a manipulated clip can go from niche forums to mainstream timelines in a matter of hours.

In regions where misinformation spreads rapidly online, regulated digital platforms, from news media to entertainment, give users a trusted point of reference. The same applies to curated entertainment hubs such as some Indian betting sites, where verified access and regulatory compliance help distinguish legitimate platforms from misleading lookalikes.

Platform-Level Responses and Content Moderation

Major platforms like Meta, X (formerly Twitter), and YouTube have implemented policies to label or remove synthetic media, especially when it poses risks to election integrity or individual safety. These efforts include partnerships with AI companies developing detection systems, although keeping pace with the evolution of deepfake technology remains a challenge. Transparency reports now include statistics on removed or flagged synthetic content, reflecting the growing scale of the issue.

Ethical Concerns in Synthetic Media Creation

The ethics of deepfake creation extend beyond legality. Even when produced for satire or entertainment, synthetic media can exploit individuals without consent, breach privacy rights, and blur ethical boundaries in storytelling. Legal frameworks are still catching up, with some countries introducing criminal penalties for malicious use of synthetic likenesses.

Tools and Browser Extensions for Detecting Manipulated Media

Users now have access to browser extensions and detection tools capable of identifying anomalies in video and audio files. Services like Deepware Scanner, Sensity AI, and Microsoft’s Video Authenticator analyze metadata, pixel inconsistencies, and compression artifacts to flag potential manipulations. As these tools improve, they provide critical resources for journalists, educators, and everyday internet users seeking to verify online content.

Digital Literacy as a Defense Mechanism

Educating users on recognizing manipulated media is a key strategy against misinformation. Training programs now emphasize critical thinking, source verification, and reverse image searches as everyday skills. Schools, NGOs, and media outlets are partnering to integrate digital literacy into broader educational initiatives, creating more resilient online communities.

The Future of Digital Identity in a Synthetic Age

As deepfake realism continues to improve, the challenge will shift from detection to establishing systems of verified authenticity. Blockchain-based watermarking, biometric authentication, and decentralized content verification could become standard in digital media. Maintaining a trustworthy online identity will require both technological safeguards and public awareness, ensuring that authenticity remains a cornerstone of the digital experience.
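
To make the idea of verified authenticity more concrete, here is a minimal sketch of content verification in Python. It is an illustration under stated assumptions, not a description of how any particular platform or standard works: the key `PUBLISHER_KEY` and the helpers `fingerprint`, `sign_media`, and `verify_media` are hypothetical names, and the sketch uses a shared-secret HMAC for brevity, whereas real provenance systems generally rely on public-key signatures so that anyone can verify without holding the secret.

```python
import hashlib
import hmac

# Hypothetical shared secret held by the publisher (an assumption for this
# sketch). Real provenance systems typically use public-key signatures.
PUBLISHER_KEY = b"example-publisher-key"


def fingerprint(media_bytes: bytes) -> str:
    """Return a SHA-256 digest that identifies the exact media content."""
    return hashlib.sha256(media_bytes).hexdigest()


def sign_media(media_bytes: bytes, key: bytes = PUBLISHER_KEY) -> str:
    """Produce an authenticity tag over the content fingerprint."""
    digest = fingerprint(media_bytes).encode("ascii")
    return hmac.new(key, digest, hashlib.sha256).hexdigest()


def verify_media(media_bytes: bytes, tag: str, key: bytes = PUBLISHER_KEY) -> bool:
    """Check that the content still matches the tag issued at publication."""
    expected = sign_media(media_bytes, key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)


# Placeholder bytes standing in for a real video file.
original = b"frame-data-of-the-published-video"
tag = sign_media(original)

print(verify_media(original, tag))                # True: content is unchanged
print(verify_media(original + b"tampered", tag))  # False: content was altered
```

A check like this only shows that a file is byte-for-byte what the publisher released. It says nothing about whether the original footage was genuine, which is why the paragraph above pairs technical safeguards with public awareness.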
